This subject can easily fly off on different tangents, but I wanted to give it a go so that we can all learn from our collective experiences along our many paths to producing quality music and beyond.

I have always felt that the gap between what audiophiles require and what music production engineers work with is a huge source of division between the two camps. Can they coexist? Why haven’t they in the past? Are there ongoing efforts to bridge the gap?

I believe there’s a distinction between the person who takes their music

listening seriously and the engineer who works within the confines of

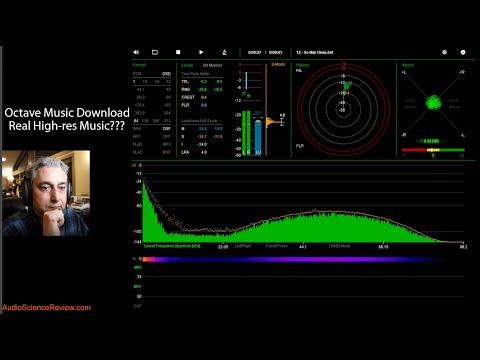

their industry niche. For example, CD quality is 44.1khz at 16 bit while

people who work in the AV industry, video types stay within their 48khz

world for an “industry standard” solution. While the audiophile these days

are looking into higher resolution audio, 96khz, 176khz, 192khz at 24 bit

or even a 32 bit floating process! Those are some staggering bit rates that

are a long way from home considering CDs just a few years ago were

all the rage.
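To put rough numbers on those formats, here is a minimal sketch that computes the uncompressed stereo PCM data rate as sample rate × bit depth × channels. The function names and the format list are illustrative, and pairing 48 kHz with 24 bit for the video case is an assumption, since only the sample rate is given above.

```python
# Uncompressed PCM data rate = sample rate x bit depth x channels.
# The format list below is illustrative; the 48 kHz / 24-bit pairing
# is an assumption (the post only names the 48 kHz sample rate).
FORMATS = [
    ("CD", 44_100, 16),
    ("Video/AV (assumed 24 bit)", 48_000, 24),
    ("Hi-res 96/24", 96_000, 24),
    ("Hi-res 176.4/24", 176_400, 24),
    ("Hi-res 192/24", 192_000, 24),
    ("Hi-res 192/32 float", 192_000, 32),
]


def pcm_bit_rate(sample_rate_hz, bit_depth, channels=2):
    """Return the raw PCM data rate in bits per second."""
    return sample_rate_hz * bit_depth * channels


def megabytes_per_minute(sample_rate_hz, bit_depth, channels=2):
    """Return storage needed for one minute of audio, in MB (10**6 bytes)."""
    bits_per_minute = pcm_bit_rate(sample_rate_hz, bit_depth, channels) * 60
    return bits_per_minute / 8 / 1_000_000


if __name__ == "__main__":
    for name, rate, depth in FORMATS:
        kbps = pcm_bit_rate(rate, depth) / 1000
        mb = megabytes_per_minute(rate, depth)
        print(f"{name:28s} {kbps:10.1f} kbit/s {mb:8.2f} MB/min")
```

By this arithmetic a stereo 192 kHz/24-bit stream carries roughly six and a half times the data of a CD.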

So why hasn’t there been a push by working engineers to jump on board a higher-than-thou resolution rocketship? What’s the holdup? Who is dictating the dumbing down of high-res audio resources for the masses?

There seem to be two camps in this venture: the believers and the unbelievers. The unbelievers state that there is no difference if a product is upsampled from a lower resolution to a higher one. Can the average person tell the difference? Well, if that’s our criterion, then we deserve what we get. But a swift dismissal is not the end of the story. There is a difference, a huge difference, when a musical piece is mixed and the two-bus is captured as a high-res master.

The piece of the puzzle, IMO, is this. Let’s say the individual instrument tracks were recorded in a DAW at 48 kHz/24 bit. If we lay down the two-track master at the same rate, we are compounding the limitations of that rate onto the music. Whereas if we mix the song down to a higher-resolution master, 192 kHz/32 bit, we eliminate the limitations that arise when audio equipment is forced to apply limits such as 44.1 or 48 kHz to a piece of music. Hi-res itself doesn’t really change anything in the recording process. The mic is still the mic, the cable is still the cable, but how we limit its input to fit 44.1 kHz etc. is the key. Those filters and limiters have introduced frequency hash and garbage into our recording! We might not be able to hear it, or should I say distinguish it as noise, but it is inherent in lower-res recordings, and it does kill a certain clarity within one’s mix.
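For reference, the limits each format imposes can be put in numbers. The sample rate fixes the highest frequency the format can carry (half the sample rate, the Nyquist frequency), which is why a converter has to filter out everything above that point before capture. The sketch below uses textbook formulas for ideal integer PCM; the function names are illustrative, and real converters and filters will not match these ideal figures exactly.

```python
import math


def nyquist_hz(sample_rate_hz):
    """Highest frequency a given sample rate can represent (half the rate)."""
    return sample_rate_hz / 2


def ideal_dynamic_range_db(bit_depth):
    """Theoretical signal-to-quantization-noise ratio for a full-scale sine
    in ideal integer PCM, roughly 6.02 * bits + 1.76 dB.
    This does not apply to 32-bit floating point, which works differently."""
    return 20 * math.log10(2 ** bit_depth) + 10 * math.log10(1.5)


if __name__ == "__main__":
    for rate in (44_100, 48_000, 96_000, 176_400, 192_000):
        print(f"{rate / 1000:6.1f} kHz -> Nyquist limit {nyquist_hz(rate) / 1000:5.2f} kHz")
    for bits in (16, 24):
        print(f"{bits}-bit integer PCM -> about {ideal_dynamic_range_db(bits):.1f} dB")
```

At 44.1 kHz the filter has to do all of its work just above 20 kHz, while at 192 kHz it has until 96 kHz, which is the extra headroom being argued for here.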

The sooner we escape the clutches of the philosophy that lower res is just okay for the masses, the better off we all will be.

Yes, there are DAWs and interfaces that can handle higher resolutions right here, right now! C’mon!