I did a quick search to see if there was any ground-breaking technology that was being unveiled. Nope. I found this.

Then I found three other companies doing the same thing.

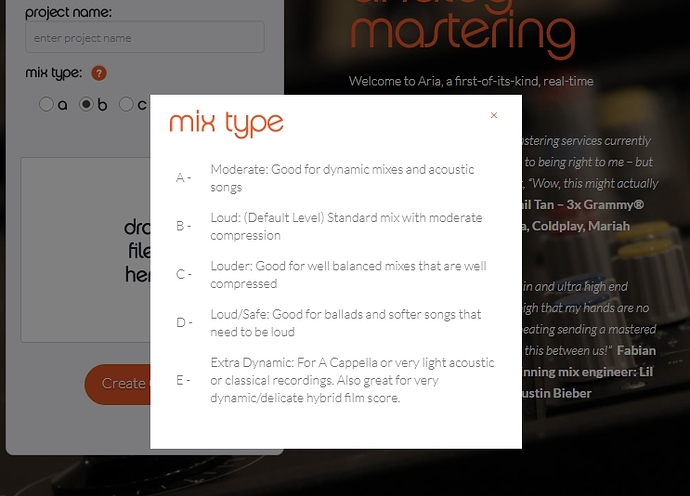

I’m sorry, but I don’t think we’re gonna get to hear this head-to-head with a pro mastering engineer. I can’t see how someone who needs a pro ME has any use for that Aria service.

Avid, Moog, Empirical Labs, Yamaha, Dangerous, Harrison, Studer, Neve… all those guys have analog circuitry that is digitally controlled. I imagine you could find, or possibly build, some analog gear linked to an open-source control mechanism that could respond to a set of conditions??? But OMG, does that sound like an insane amount of work. The first thing I thought of was my Dangerous 2-BUS summing mixer, where you change the sound based on how hard you push the signal going in and find a sweet spot that way. But there are several issues. First of all, it doesn’t really do anything; it just kind of sits there and warms things up. On the other hand, it would be incredibly easy to integrate into an AI-based rig.

It really provides more of a workflow advantage than a sonic one, though. I love what it does, but it doesn’t do all that much.

That’s one of the things I think they could actually automate easily. Oh!! Now that I think of it, if I had a DAW that could talk to a Euconized AI, I think you could do a mastering job like this with Avid hardware. The reason is that Avid makes true analog hardware in which every parameter is digitally controlled. Avid’s compressors have a 100% analog signal path, but Pro Tools can send automation signals to adjust the attack, decay, knee, sustain, release, and slope, and it can then feed two compressors in series or realign them in parallel. The compressors are very, very clean, as are their EQs and preamps. Hmmmm… that is a fascinating question.

Agreed. I doubt that Aria process is anything even close to what we’re imagining it is.

??? But you’d be in your room, with your monitors. That’s half the problem, and the other is that I wouldn’t really know what to do with a mastering rig even if I had one.

Jim: My pod malfunctioned

Arthur (AI): ??? Impossible. Does not compute!

Musician: My Pod HD malfunctioned

Guitar Center: Great! We’ll sell you our Pro Coverage warranty on your next one!

Computers don’t know how to bluff.