First off, I feel like writing today! It's the best way for me to get over my stage fright and lockdown anxiety, and it works.

And today's topic is loudness.

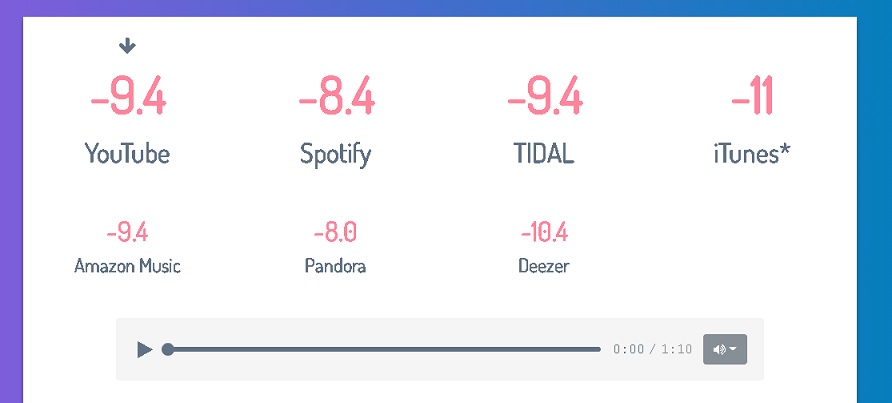

We've all been hearing a lot of discussion around the Loudness Penalty for streaming.

It's true that streaming platforms slash the volume of loud masters, but it's not all bad news. All in all, it gets our mixing juices flowing and gets us to focus more on the music, because the platforms are, in a way, giving everyone common ground.

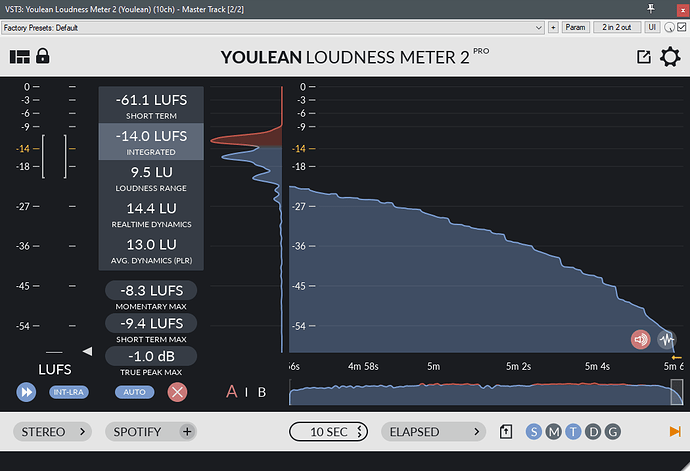

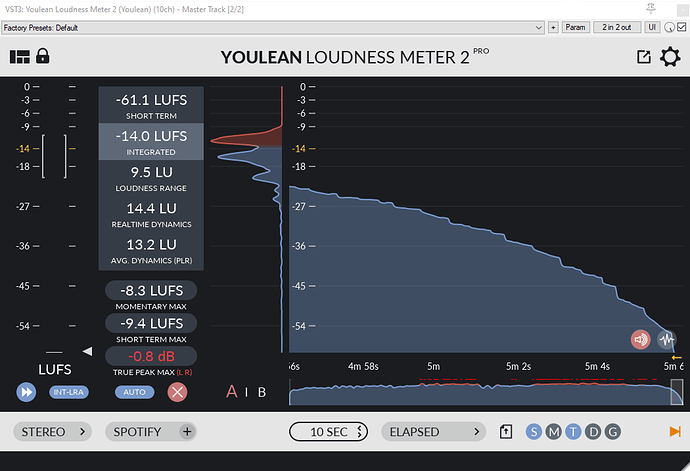

First things first: imo, don't take the loudness guide literally. Even the loudness meter designers say that.

The next step is understanding loudness.

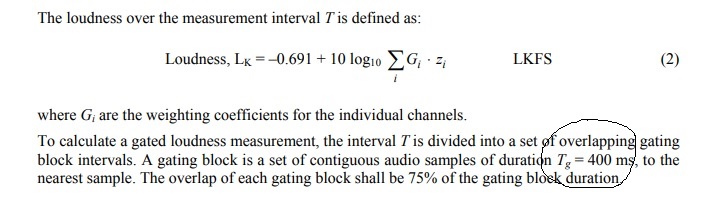

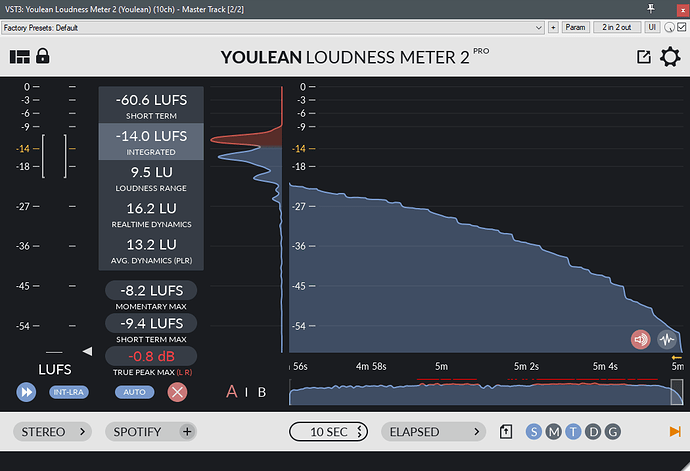

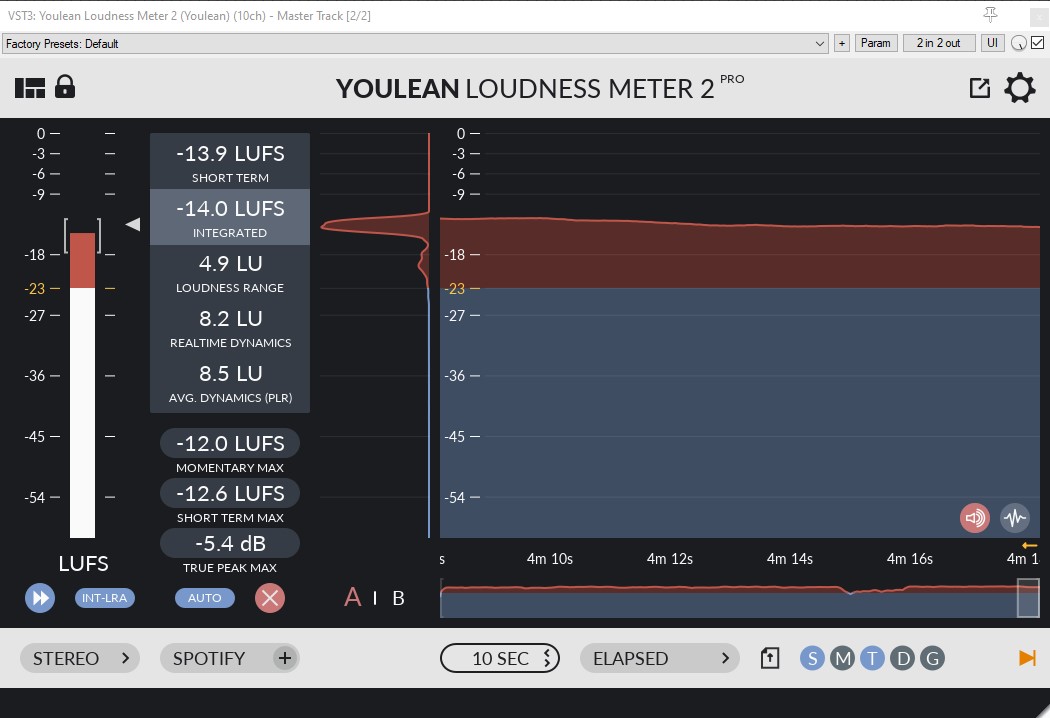

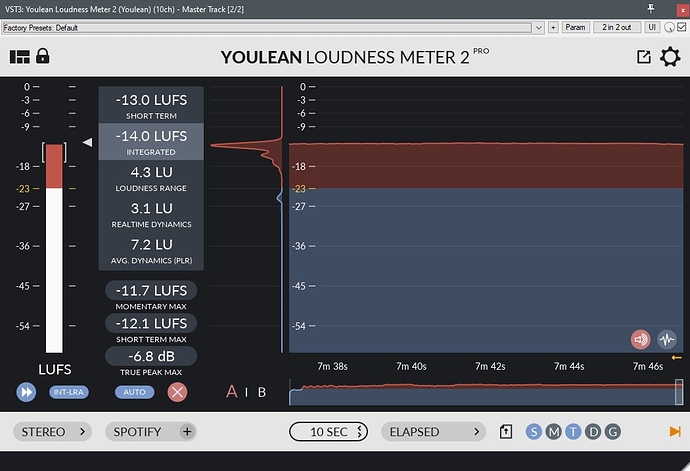

The LUFS (or LKFS) metering system used by most streaming platforms is built on the formula from the ITU paper linked at the end of this post.
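
For reference, here is a rough transcription of the gated loudness measure from BS.1770. The paper itself is the authority; the plain-words notes underneath are a reading of it, not a quote.

$$
l_j = -0.691 + 10\log_{10}\sum_i G_i\, z_{ij}, \qquad z_{ij} = \frac{1}{T_g}\int_{\text{block } j} y_i^2(t)\,dt
$$

$$
L_{KG} = -0.691 + 10\log_{10}\sum_i G_i \left(\frac{1}{|J_g|}\sum_{j \in J_g} z_{ij}\right)
$$

Here $y_i$ is channel $i$ after the K-weighting filter, $G_i$ is that channel's weight, $T_g = 0.4$ s is the block length, and $J_g$ is the set of blocks that survive both gates: louder than $-70$ LKFS, and louder than 10 LU below the average of the blocks that passed the first gate.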

In plain terms, loudness is measured in 400 ms blocks (roughly half a second of audio), with a new block starting every 100 ms, so consecutive blocks overlap by 75%. The more energy from overlapping loud hits and transients you pack into each block, and the more such blocks your song has, the louder your mix will be measured.

Now that we know the formula, the next question is: how do we beat it, right? Spoken like a true hacker lol
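
A minimal Python sketch of that windowing, under some loud assumptions: the signal is mono and held in a plain list of floats, and the K-weighting filter that a real meter applies first is skipped, so the numbers will not match an actual LUFS meter. The function names are made up for this post.

```python
import math

def block_loudness(samples, rate, block_s=0.4, hop_s=0.1):
    """Return (mean_square, loudness) for each 400 ms block, one block every 100 ms."""
    block = int(round(block_s * rate))
    hop = int(round(hop_s * rate))
    results = []
    for start in range(0, len(samples) - block + 1, hop):
        chunk = samples[start:start + block]
        mean_sq = sum(x * x for x in chunk) / block
        # Simplification: no K-weighting here, so this is not a true LUFS value.
        loud = -0.691 + 10 * math.log10(mean_sq) if mean_sq > 0 else float("-inf")
        results.append((mean_sq, loud))
    return results

def _loudness_of_mean(mean_squares):
    """Loudness of the average energy across a set of blocks."""
    return -0.691 + 10 * math.log10(sum(mean_squares) / len(mean_squares))

def integrated_loudness(samples, rate):
    """Average the blocks that pass the absolute (-70) and relative (-10 LU) gates."""
    blocks = block_loudness(samples, rate)
    loud_enough = [(ms, l) for ms, l in blocks if l > -70.0]
    if not loud_enough:
        return float("-inf")
    relative_gate = _loudness_of_mean([ms for ms, _ in loud_enough]) - 10.0
    kept = [ms for ms, l in loud_enough if l > relative_gate]
    return _loudness_of_mean(kept)
```

One thing the sketch makes visible: silent blocks are thrown out by the gate, so a quiet intro or outro does not pull the overall reading down nearly as much as a plain average over the whole track would.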

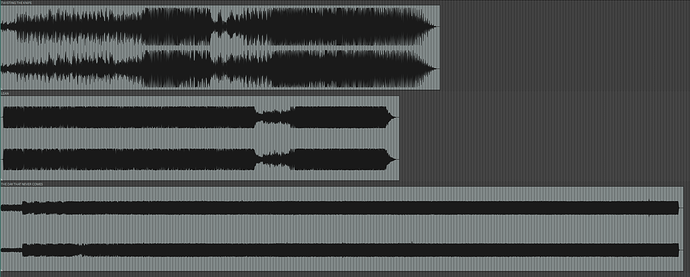

Half a second is an eternity in sound mixing. This is bad news for fast-tempo songs and good news for slow-tempo songs, but it doesn’t mean we should all start writing slow ballads. What we can do is identify whether we are, or have been, overdoing some things in practice. For example, in a fast-tempo song the tail of a loud kick or snare may last longer than 400 ms, overlapping with several other samples and adding up to a whopping penalty. Then streaming platforms will just turn your mix down even if it doesn’t sound loud, and you can say goodbye to your quieter parts (if you have any!).
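
To put a number on "turn your mix down", here is a small sketch of the arithmetic. It assumes a platform target of -14 LUFS, which is a commonly quoted figure and not something taken from the ITU paper, and it assumes the platform only turns loud tracks down unless told otherwise. Both function names are hypothetical.

```python
def normalization_gain_db(measured_lufs, target_lufs=-14.0, allow_boost=False):
    """Gain (in dB) a platform would apply to bring a track to its target."""
    gain = target_lufs - measured_lufs
    # Assumption: quiet tracks are left alone unless boosting is allowed.
    if gain > 0 and not allow_boost:
        return 0.0
    return gain

def db_to_linear(gain_db):
    """Convert a dB gain into a plain amplitude multiplier."""
    return 10 ** (gain_db / 20)
```

So a master that measures -8 LUFS gets `normalization_gain_db(-8.0)`, which is -6 dB, or roughly half the amplitude. Every part of the song is pulled down by that amount, quiet sections included.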

So how do we beat it? And do we need to?

Groove it!

Maybe we can consider a groove instead. Hit hard in the first measure, hit a bit softer in the second measure, and so on. Then, when needed, we can thump those double kicks in quick bursts. If nothing else, it might make us better songwriters in general. When we groove our loud parts, it not only fools the algorithm but might also create more emotion.
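
A back-of-the-envelope sketch of why this helps, assuming the hits are identical apart from their gain and ignoring everything else in the mix. The gain values and the function name are invented for illustration.

```python
import math

def average_level_db(hit_gains):
    """Average energy of a hit pattern, in dB relative to every hit at gain 1.0."""
    mean_sq = sum(g * g for g in hit_gains) / len(hit_gains)
    return 10 * math.log10(mean_sq)

flat = [1.0, 1.0, 1.0, 1.0]       # every hit at full level
groove = [1.0, 0.7, 0.85, 0.7]    # hard, softer, medium, softer

saving_db = average_level_db(groove) - average_level_db(flat)
```

With these made-up gains the grooved pattern averages about 1.7 dB lower than the flat one, while the hardest hit is exactly as loud as before.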

Move it!

Focus on the dynamics of the rich, ear-sensitive mid-high range. The richer the dynamics in this range, the more movement your listener will feel while commuting or working out, when the mind isn't really focused on taking in the whole soundscape and its finer details.

Delay it!

In streaming versions, the things most commonly lost are ambience, reverb and stereo spread.

Even though we can stream 386 kbps on YouTube on a PC, on most commuter phones HD is turned off and you're lucky to get 128 kbps of sound quality. That's where these shenanigans will be lost.

That doesn’t mean we stop using our nice reverbs; just consider using more dry delays, which aren't lost in the low-quality versions, for a similar effect. Then you can still top it off with a nice reverb… or, as CLA does it, with 10 different reverbs! Though CLA Echosphere is a decent plugin.

Think it through!

Last but not least, let's focus on our goal as songwriter-producers:

We need to understand our audience and write music for them instead of getting bogged down in geeky details. Good music and a good arrangement will sound good even when heard in mono through a subpar, un-hyped mix.

Reference: the International Telecommunication Union (ITU) paper about LUFS, Recommendation ITU-R BS.1770-3 (08/2012)

R-REC-BS.1770-3-201208-S!!PDF-E.pdf (1.5 MB)